Topic 21 | Introduction to TensorFlow

AI Free Basic Course | Lecture 21 | Introduction to TensorFlow

The stage we have studied so far is called conventional machine learning. In conventional machine learning, we work on features. In other words, conventional machine learning algorithms do not work directly when raw data, such as an image or other unstructured data, is given as input. Conventional machine learning algorithms include linear regression, logistic regression, decision trees, random forests and boosting algorithms. It is called conventional machine learning because it cannot process raw data directly, and this is its fundamental limitation. We used sklearn, a well-known library for conventional machine learning, and discussed the necessary data science techniques with it. Furthermore, we did exploratory data analysis of structured data: how to clean it and how to transform it so that the model can process it and produce better results.

We first studied regressors, which predict scores, and to evaluate those predictions we discussed error measures such as mean squared error (MSE) and root mean squared error (RMSE). We also saw that these models take structured data, i.e. features, as input.
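As a quick reminder of the two error measures, here is a minimal sketch in plain Python (the sample scores are made up for illustration). MSE averages the squared differences between actual and predicted values, and RMSE takes its square root so the error is back in the units of the target. sklearn's `sklearn.metrics.mean_squared_error` computes the same MSE.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def root_mean_squared_error(y_true, y_pred):
    """Square root of the MSE, expressed in the same units as the target."""
    return math.sqrt(mean_squared_error(y_true, y_pred))

# Hypothetical scores: actual vs. predicted
actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.5, 5.0, 4.0, 8.0]
print(mean_squared_error(actual, predicted))  # 1.3125
print(root_mean_squared_error(actual, predicted))
```

Squaring the errors penalises large mistakes more than small ones, which is why a single bad prediction (here, 2.0 vs 4.0) dominates the result.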

We then discussed different classifiers and compared them to see which classifier performs better than the others.

In our last lecture we discussed evaluation metrics such as the confusion matrix, which is the basis from which other metrics like accuracy, precision and recall are derived. The F-measure is a topic on which you have to do your own research.
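For a binary problem, the four cells of the confusion matrix (true positives, false positives, true negatives, false negatives) are enough to compute the other metrics. A minimal sketch, assuming labels 1 (positive) and 0 (negative) and made-up predictions; the F-measure shown is the common F1 score, the harmonic mean of precision and recall.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification problem."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if t == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, fp, tn, fn

def metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 derived from the confusion counts."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(confusion_counts(y_true, y_pred))  # (3, 1, 3, 1)
print(metrics(y_true, y_pred))
```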

We spent a lot of time on EDA, in which we visualized data and cleaned and scaled it. We do a lot of preprocessing on the data to make it suitable for the model to produce the desired output.
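Scaling was one of those preprocessing steps. Below is a minimal sketch of two common scalers, min-max scaling and standardization (z-score), assuming a single numeric column given as a Python list. sklearn's `MinMaxScaler` and `StandardScaler` apply the same formulas column by column; the population standard deviation is used here to match `StandardScaler`.

```python
import statistics

def min_max_scale(values):
    """Map values linearly onto [0, 1] using the column's min and max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to mean 0 and scale to (population) standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

ages = [20, 30, 40, 50]  # hypothetical feature column
print(min_max_scale(ages))  # [0.0, 0.3333333333333333, 0.6666666666666666, 1.0]
print(standardize(ages))
```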

After conventional machine learning, we are going to discuss the other branch of machine learning, called deep learning.

In deep learning, the jobs that have been performed by regressors and classifiers will now be performed by neural networks.

So the branch of machine learning in which we use neural networks to perform different tasks is called deep learning.

What is a neuron? A neuron is the unit of computation in our brain. Our brain consists of billions of neurons, which collaborate with each other to perform tasks. The idea of the neural network evolved from biology.

A natural question arises: if neurons can do computations, why can't these computations be done by a computer? Why shouldn't the technique neurons use to perform computation be used in artificial intelligence to do the same? Work on this idea has been going on since around 1960, so deep learning is not a new thing.

Our brain constantly makes decisions. Suppose you have to decide whether a picture shows a bus or a car. To make this decision, our brain needs input: the image itself must be given to the brain before it can decide.

Similarly, taking a person's voice as input, we can not only recognize the person but also recognize the tone of voice: whether it is angry or happy, high or low.

When the techniques that the neurons in our brain use to identify things or perform multiple tasks are applied to computers, they take the shape of artificial intelligence. The function of a neuron, when modeled or programmed in a computer, becomes an artificial neuron, and the network so formed is called a neural network.

Using the network of neurons in our brain, we perform multiple tasks such as seeing, listening and speaking. The neural network in our brain is a highly advanced one, but the neural networks used in computers have not yet advanced enough to perform multiple tasks in this way. A typical artificial neural network is built to identify either a person's voice or a person's image; it cannot perform these two tasks simultaneously. Advancements in the field are being made, and we will study them accordingly.

There are a lot of similarities between our brain and an artificial neural network.

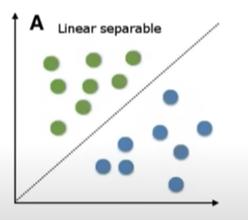

As discussed earlier, our machine learning models stop performing well beyond a certain limit. For example, the models we studied were linear models. The data given belonged to two classes that could be separated by a line, as in fig A above. The line that separates the two classes of data is called the decision boundary. This line tells us on which side, or in which area, point A and point B lie. So when the data is simple, linear models separate it easily.
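A linear decision boundary can be written as w1·x1 + w2·x2 + b = 0, and a point is assigned to one class or the other depending on which side of that line it falls. A minimal sketch, where the weights, the bias and the coordinates of points A and B are hypothetical values chosen for illustration, not the ones in the figure:

```python
def side_of_line(point, w, b):
    """Return 1 if the point lies on the positive side of w·x + b = 0, else 0."""
    score = sum(wi * xi for wi, xi in zip(w, point)) + b
    return 1 if score > 0 else 0

# Hypothetical boundary: the line x2 = x1, written as -1*x1 + 1*x2 + 0 = 0
w, b = (-1.0, 1.0), 0.0
point_a = (1.0, 3.0)  # above the line
point_b = (3.0, 1.0)  # below the line
print(side_of_line(point_a, w, b))  # 1
print(side_of_line(point_b, w, b))  # 0
```

Training a linear model such as logistic regression amounts to finding good values for `w` and `b`; the shape of the boundary is always a straight line.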

When the pattern in the data is complex, as in the figure, the data cannot be separated into the classes of point A and point B by a straight line.

The data in the diagram is arranged in a circular pattern, so we need to shift towards nonlinear techniques to classify such complex data. With complex data, we may not be able to identify the pattern ourselves and preprocess the data so that the model can use it to train itself.
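A straight line divides the plane into two half-planes, so it cannot enclose a disc, whereas a circular boundary x1² + x2² = r² can. A minimal sketch, assuming hypothetical data with one class inside a disc and the other on a surrounding ring. Note that here a human supplied the nonlinear rule, which is exactly the step deep learning tries to hand over to the model.

```python
import math
import random

def inside_circle(point, radius=1.0):
    """Nonlinear rule: class 1 if the point lies inside the circle, else class 0."""
    x1, x2 = point
    return 1 if x1 ** 2 + x2 ** 2 < radius ** 2 else 0

def make_ring_data(n, seed=0):
    """Hypothetical data: class 1 near the origin, class 0 on an outer ring."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        angle = rng.uniform(0, 2 * math.pi)
        if rng.random() < 0.5:
            r, label = rng.uniform(0.0, 0.8), 1  # inner disc
        else:
            r, label = rng.uniform(1.2, 2.0), 0  # outer ring
        data.append(((r * math.cos(angle), r * math.sin(angle)), label))
    return data

data = make_ring_data(200)
correct = sum(inside_circle(p) == label for p, label in data)
print(f"circle boundary accuracy: {correct / len(data):.2f}")  # 1.00
```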

This means we have to adopt techniques that not only free us from manual data preprocessing but also make it the model's responsibility to identify complex patterns in the data and decide whether a circle or an oval needs to be drawn to classify the data into different classes.

The diagram above shows the big difference between machine learning and deep learning.

In conventional machine learning, a human describes the image of a car and converts it into structured data so that the model can understand it.

To ease this process and avoid the hectic job of converting the data of millions of cars into structured data, deep learning shifts the job of converting the data into a form the machine can learn from to the model itself.

In deep learning, the network of neurons accepts the image, processes it and produces an output based on it.
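To make the contrast concrete: for a conventional model, a human would first reduce an image to a few hand-picked numbers, whereas a neural network typically receives the raw pixel values directly. A minimal sketch, assuming a tiny hypothetical 3x3 grayscale image stored as a nested list; the two hand-crafted features (mean brightness and fraction of bright pixels) are made up for illustration.

```python
# Hypothetical 3x3 grayscale image, pixel intensities in [0, 255]
image = [
    [0, 128, 255],
    [64, 200, 32],
    [10, 90, 180],
]

def hand_crafted_features(img):
    """Conventional ML: a human decides which summary numbers describe the image."""
    pixels = [p for row in img for p in row]
    mean_brightness = sum(pixels) / len(pixels)
    bright_fraction = sum(p > 127 for p in pixels) / len(pixels)
    return [mean_brightness, bright_fraction]

def raw_pixel_input(img):
    """Deep learning: flatten the raw pixels (scaled to [0, 1]) and let the network learn."""
    return [p / 255 for row in img for p in row]

print(hand_crafted_features(image))
print(len(raw_pixel_input(image)))  # 9
```

With real photos of cars the raw input has thousands or millions of pixels, which is why writing good hand-crafted features for each image becomes the hectic job described above.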

Since it is not possible to transfer the advanced neurons of the brain into a computer, simple mathematical functions were named neurons. For example, suppose you are given two numbers and have to identify the larger one. The max function does this job, and the ReLU (Rectified Linear Unit) neuron is built on it: it returns the larger of its input and zero. You may not believe it, but ReLU is one of the most popular neurons used in AI. So neurons are simple mathematical functions, and in a neural network we arrange ReLU-type functions in different layers that process the input and give us the output.
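A minimal sketch of that idea in plain Python: ReLU is just max(0, z), and a layer applies it to several weighted sums of its inputs. To tie it back to the "larger of two numbers" example, the hypothetical 2 → 3 → 1 network below uses hand-picked (not learned) weights and the identity max(a, b) = relu(a − b) + relu(b) − relu(−b). In a real network, for example one built with TensorFlow, the weights would be learned from data instead.

```python
def relu(z):
    """ReLU (Rectified Linear Unit): returns the larger of z and 0."""
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """One layer: each row of `weights` is a neuron computing w·x + b, then the activation."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

# Hand-picked parameters (hypothetical, not trained) for a 2 -> 3 -> 1 network
HIDDEN_W = [[1.0, -1.0],  # neuron 1: relu(a - b)
            [0.0, 1.0],   # neuron 2: relu(b)
            [0.0, -1.0]]  # neuron 3: relu(-b)
HIDDEN_B = [0.0, 0.0, 0.0]
OUTPUT_W = [[1.0, 1.0, -1.0]]  # relu(a - b) + relu(b) - relu(-b)
OUTPUT_B = [0.0]

def max_network(a, b):
    hidden = dense([a, b], HIDDEN_W, HIDDEN_B, activation=relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]  # linear output layer, so it can be negative

print(max_network(3.0, 7.0))    # 7.0
print(max_network(-2.0, -5.0))  # -2.0
```

The output layer is left linear because relu(b) − relu(−b) recovers b, which may be negative; a ReLU on the output would clip negative maxima to zero.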
